Disclaimers

- This blog post is for fun. Do not self-diagnose!

- Do take it with a grain of salt.

- The techniques mentioned here were relevant when I was a Red Team operator. Things might have changed by now!

- Non-technical people reading this (for any reason): skip the jargon, check the references! You could analyze any profession in a psychological tongue-in-cheek way too!

Setting: A Hypothetical Job Interview for the role of “Senior Red Team Operator”

Characters:

- Interviewer

- Interviewee (John)

Part 1 – The Addiction Part (kind of)

– So John, can you answer me this one question, what really happens when an implant is double-clicked in an assume-breach scenario?

– Of course! Can you give me a hint about which transport the implant uses to reach the C2 server? Is it DNS? HTTPS? Anything fancier?

– Let’s assume it’s HTTPS with Cert Pinning. Are you familiar with that?

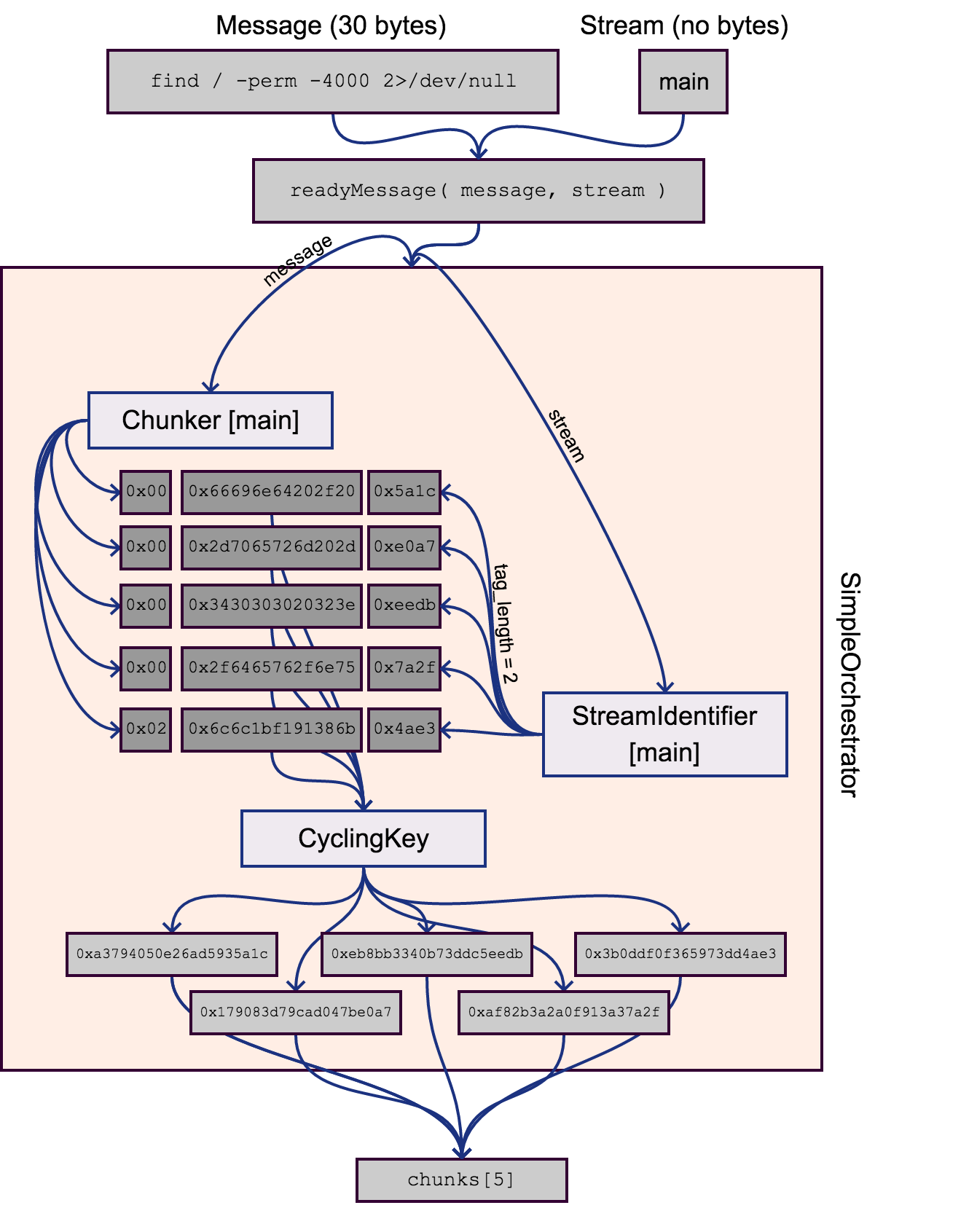

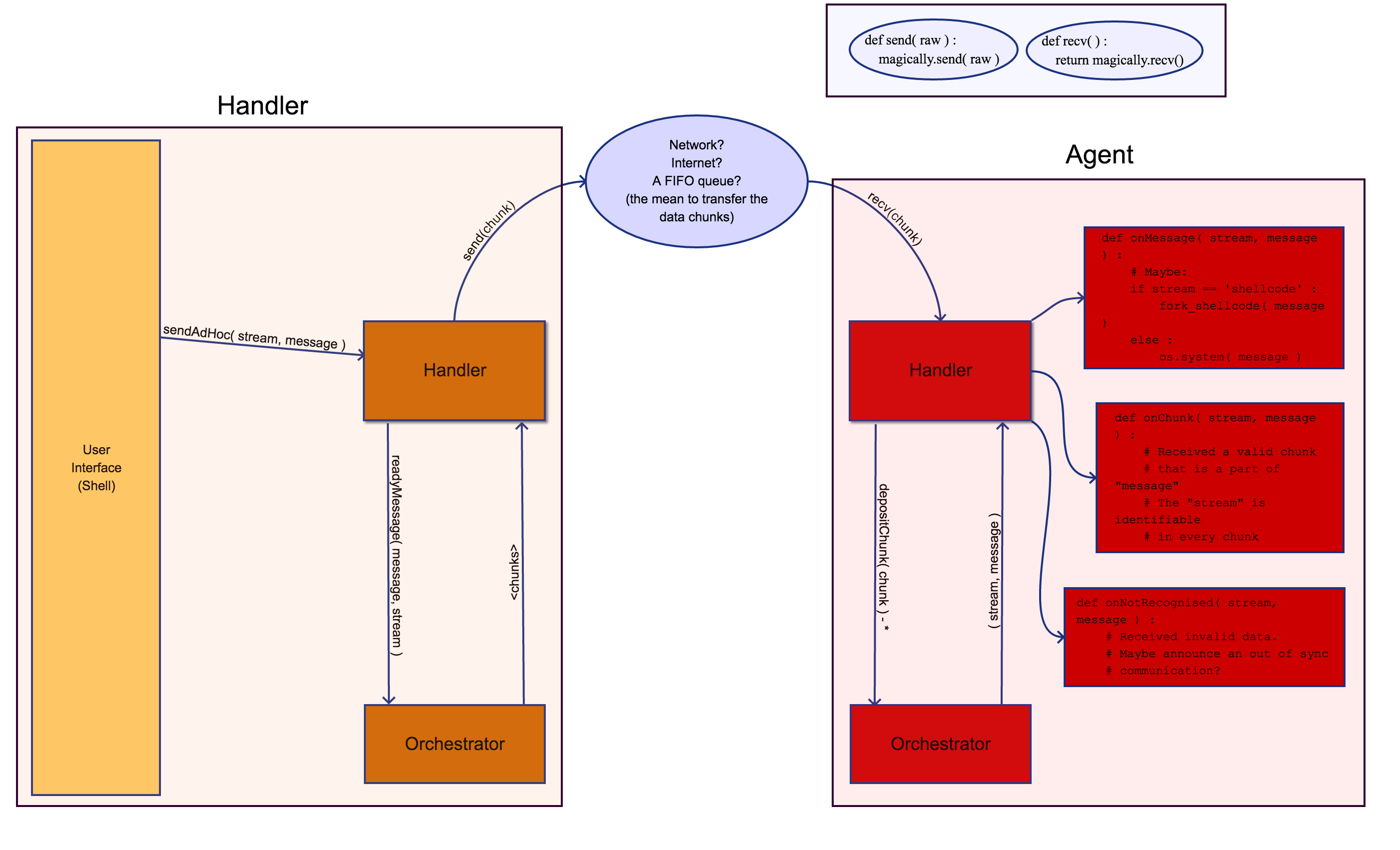

– I sure am! So yeah, the implant, when double-clicked, starts a process on the host. It can execute the malware code directly or plant it somewhere to be executed at a later time, like COM hijacking on Windows. Eventually, maybe after self-decrypting its main components, the malicious code attempts to reach the C2 server. So, it most probably starts with DNS, resolving the C2 server’s IP address from a Domain Name embedded or calculated in its code. Then it starts a TLS handshake with the C2 server (or a reflector). The C2 Server replies with a Server Hello TLS message accompanied by an X.509 Certificate, and the malware checks whether the Certificate matches the “Pin”, a value which is embedded in the code and most of the time is a SHA-2 hash. If all goes well, the rest of the TLS Handshake takes place, and this is the point where the Red Team operator used to get a message like “Meterpreter session opened (session N)” but now gets something like “Host called home” or anything along these lines…
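
(An aside for the jargon-lovers: the pin check John describes can be sketched in a few lines of Python. This is only a minimal sketch, assuming the pin is a SHA-256 digest of the whole DER-encoded certificate; real implementations often pin the public key instead. The function names are made up for illustration.)

```python
import hashlib
import hmac


def fingerprint_sha256(cert_der: bytes) -> str:
    """Hex-encoded SHA-256 digest of a DER-encoded certificate."""
    return hashlib.sha256(cert_der).hexdigest()


def matches_pin(cert_der: bytes, pinned_hex: str) -> bool:
    """True when the presented certificate hashes to the embedded pin.

    Assumption: the pin is stored as a hex string. compare_digest does the
    comparison in constant time, which is a common habit for digest checks.
    """
    return hmac.compare_digest(fingerprint_sha256(cert_der), pinned_hex.lower())


# Placeholder bytes stand in for a real certificate here.
demo_cert = b"placeholder bytes, not a real certificate"
demo_pin = fingerprint_sha256(demo_cert)
pin_ok = matches_pin(demo_cert, demo_pin)              # same bytes, so the pin matches
pin_bad = matches_pin(demo_cert + b"!", demo_pin)      # any change breaks the match
```

In a real client the DER bytes could come from `ssl.SSLSocket.getpeercert(binary_form=True)` once the handshake has completed; if the pin does not match, the connection is simply dropped.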

– That’s a brief but sufficient ans…

– I’m not done! This message is printed to the Red Team operator’s screen through some call which ends up in the drawing system of their GPU, and the screen’s pixels change in a way that spells out one of the phrases I just stated. The operator’s eyes then perceive this change and the image goes to the Occipital Lobe of their brain for decoding. This is happening super fast! Keep in mind I’m skipping a lot of stuff here, like the retina’s conversion of light into electrical signals sent down the Optic Nerve and the flipped image trigonometry. I can explain these later if you wish, now…

– I think that’s way more than en…

– So yeah, the image is processed in the Visual Cortex of the brain, the words are, we could say, “parsed”, but I’m skipping some stuff here, and then they are passed to the Middle Temporal Gyrus (or MTG for short) of the Red Team operator’s brain, which makes them realise what the phrases printed in the C2 server mean, and they mean that a shell just called home!

– John, we could skip to the next que…

– That’s actually the best part! Now that the operator realises that they got a shell, one thing happens before everything else related to Lateral Movement, checking if the host is Domain-joined and the rest. The operator’s neuroreceptors get a huge pinch of Dopamine in their midbrain, which effectively does two things: first, it puts the operator in a very good and motivated mood and second, with the help of Glutamate, they take note of how exactly they got this shell, making them a better Red Team operator, hence making it more likely for them to feel that good again in the future. That might lead to a promotion, leading to even more Dopamine! We could say, at this point, that the Red Team operator is a junkie for shells! Isn’t it amazing?

– This was actually a very weird way to explain a connect-back! I have another question for you: How would you continue if you realized that the phishing campaign you sent didn’t go well?

Part 2 – The Conditioning Part

– Oh, what do you mean? Did we get any HTTP canary, did anyone even open the email? Did we get a shell and it was insta-killed?

– Let’s say you get no shells and no one opened your emails. Radio silence kind of thing!

– Oh then, I’d check if the email pretext is good enough. It has to be just urgent and formal enough to push someone towards the wrong decision of clicking the malware. I could talk for hours about the psychological violence of phishing campaigns and the general ethics of the Red Teamer, anyway…

– Let’s assume that with the changed pretext the email gets opened but you’re not getting a shell?

– I’m really glad you are asking me this question! You know there was this guy called B. F. Skinner, who was the father of Behaviourism, the concept that we, as a species, are kind of animals that form our behaviour mainly through conditioning and our reward system! So this guy…

– John, could we please skip to the technical part now?

– Sure. So I’d get from the Information Gathering phase what kind of AV they’re currently using and try creating AV-bypasses for this specific AV by hand.

– And if you are getting a shell now but it dies on you in seconds?

– So, this Skinner guy ran an experiment by putting rats and pigeons in a box. He used conditioning to make them learn to press a button in that box. They learned that because he rewarded them with food every time they pushed that button. Well, not every time, and that’s exactly the catch! The animals got more conditioned to the button when he didn’t provide food on every button push, but just sometimes!
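
(The “not every time” catch can be played with in a toy simulation. This is only a sketch, assuming each button press pays out independently with a fixed probability, which is one simple way to model an intermittent reward schedule. The function name and parameters are hypothetical, and nothing here reproduces Skinner’s actual protocol.)

```python
import random
from typing import Tuple


def simulate_presses(presses: int, reward_probability: float, seed: int = 0) -> Tuple[int, int]:
    """Simulate button presses that are rewarded only some of the time.

    Returns (total rewards, longest run of unrewarded presses).
    """
    rng = random.Random(seed)  # seeded so that a run can be repeated exactly
    rewards = 0
    dry_spell = 0
    longest_dry_spell = 0
    for _ in range(presses):
        if rng.random() < reward_probability:
            rewards += 1
            dry_spell = 0
        else:
            dry_spell += 1
            longest_dry_spell = max(longest_dry_spell, dry_spell)
    return rewards, longest_dry_spell


# Food on every press versus food on roughly one press in four.
always = simulate_presses(200, 1.0)
sometimes = simulate_presses(200, 0.25)
```

With `reward_probability=1.0` there is never a dry spell; with a lower value the subject (or the operator waiting for a shell) has to sit through unpredictable stretches of nothing, which is exactly the part John finds so sticky.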

– John, can you please tell me why the shell dies? I find all these things really interesting but kind of irrelevant!

– The interview is for a Senior role, so I believed I had to explain full-stack. I’ll try to be more brief. My point is that the Red Team operator is getting kind of Skinnerbox’d, on learning how to land a working shell, by not getting it most of the time. And also, by waiting random amounts of time until they get the reward. So, if I had to fix that malware I’d finally need to write some code!

– Glad to hear that! What kind of code?

– C++ or C# most probably. The shells often die because of some Post Exploitation operation that is executed and is identified, traced and blocked by Endpoint Protection software on the host. So I’d need to get the same software in a Virtual Machine, run the same malware, do the same Post Exploitation actions and see where and how it gets killed. Then I’d try to either patch away the EDR’s DLLs from the process memory, as some of them work in userland, or change the order of the malware’s syscalls, as these are the ones that tend to be signature’d by EDRs.

– I see. Can you explain how you’d write the code?

– I guess I can! I’d fire up my IDE, most probably VSCode for these languages, and make changes to the original malware. I’d use Git to keep all code changes tidy, maybe a branch per target, and then fail to compile a bunch of times. When the code compiles I’d run the malware on the VM, do the actions and see if it gets killed. If it does I’d go back to square one, trying something else.

– That’s a bit generic but I get your idea.

– This continuing cycle of searching Windows API docs, coding, compiling, running and getting, or not getting a reward is the basis of it all. It is a Skinner box as well! I do something and I might get rewarded. This hooks me into trying it more and more, so I’ll eventually manage it! Monkeys could even author Shakespeare exactly this way!

– Your approach is really optimistic John. I like that. It all sounds so based, but in a really absurd way! So there is this question now: how would you get notified when you get a shell?

Part 3 – The Compulsive Part

– This brings me to this other topic! By now, having done so many things for this assessment, I’d totally be obsessed! It has to work, right? I mean, I wordsmith’d the pretext, modified the code, tested rigorously, launched the phishing campaign and all. So every minute I don’t get a connect-back must be painful by now!

– So, would you set up some Slack notification or something?

– There are plenty of ways! I can continuously stare at my screen, waiting for the shell to come. But, as some persistence techniques require a reboot to work, we really have no clue how long it can take to get a shell, or if they’re going to work at all. So most C2s have notification systems that send Slack or Telegram messages on every new connect-back. I’d use one of those. I could also check the Payload Delivery server logs. I didn’t mention it, but we are totally keeping those! So, every few minutes I check my Slack or Telegram or the tail -f of the logs, as I might have missed something when I went to the fridge to get water, or when I went for a smoke.
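
(For the curious, the “tail -f plus Slack” habit can be sketched as a tiny log poller. This is a rough sketch under stated assumptions, not how any particular C2 does it: the log path, the `/payload` marker and every function name are hypothetical. The only real-world detail relied on is that Slack incoming webhooks accept a JSON body with a `text` field.)

```python
import json
import urllib.request
from typing import Callable, List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return the lines appended to `path` since byte `offset`, plus the new offset."""
    with open(path, "rb") as fh:
        fh.seek(offset)
        data = fh.read()
    # Caveat: a line that is still being written may be split across two polls.
    return data.decode("utf-8", errors="replace").splitlines(), offset + len(data)


def build_slack_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a Slack incoming webhook."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def poll_once(path: str, offset: int, marker: str, notify: Callable[[str], None]) -> int:
    """Call `notify` for each new log line containing `marker`; return the new offset."""
    lines, new_offset = read_new_lines(path, offset)
    for line in lines:
        if marker in line:
            notify(line)
    return new_offset
```

To send for real, `notify` could wrap `urllib.request.urlopen(build_slack_request(url, line), timeout=10)`, and `poll_once` could run in a loop with a sleep in between. Keeping the notifier as a plain callable means the same loop would work for Telegram or anything else.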

– This is a lot of dedication! I like that!

– Probably you shouldn’t. Checking all the time is a part of the job that is not useful and is kind of addictive as well. Like checking socials or messages on your phone all the time. You could even say that is the compulsion part of an obsession. Because, Red Team operators more often than not get obsessed with landing in the target organization. It gets very personal. You could argue that it is passion, and sometimes it really is that, but I’d say it easily becomes an obsession. And compulsion follows obsession sooner or later! It can loosely be seen as an addiction too!

– Wait, you are saying that the Red Teamers have OCD?

– No! You can have Obsession-Compulsion cycles without having OCD, just like you can have narcissistic traits without being a narcissist. The thing is that I believe these cycles make the professionals less effective, which is counter-intuitive, but I can explain.

– I don’t know if this is an interview anymore.

– It really is a blog post, but let’s continue pretending for a moment – it’s gonna end soon!

– Ok, go ahead. If this way of working is ineffective, as you say, what is an effective one? To keep the interview format, what new ways would you bring to the Team in case you join?

Part 4 – The Cure for this Profession

– If I join the team I’d work in a very specific way. My way really comes from Buddh…

– I was really afraid we’d end up with something like this.

– It’s not a religious belief though. It is about not merging oneself with the outcome. Not valuing myself as a person based on whether I managed to send a piercing phishing campaign or a well-baked payload. It might seem bad at first, but it really relieves me from a lot of pressure I’d put on myself. Without this pressure and mental overhead I can really, calmly, hack them. My mind’s CPU can stop spending cycles on my self-worth and I can use those cycles to better think of a good spear-phishing target or create a solid Malleable Profile.

– This is a very philosophical point of view Jo…

– Thank you, sir!

– I was saying that I appreciate it, but I’d like to ask if there is a more practical example of what value you could add to our team?

– Well, I speak Terraform fluently, as I like the preparation phase. For me, preparation is the best part of an assessment as the reward cycle hasn’t started yet and so the actions a Team takes are calm and measured. It is when the rewarding phase comes that Red Teamers lose their cool. They log in from home, they do OpSec-unsafe stuff, they run PowerShell hoping to not get caught that one time. And that’s why they fail. These actions are reckless ways to get Dopamine. It’s their midbrain loosely overriding their Frontal Cortex, which knows that Elastic SIEM has a bunch of rules that booby-trap PowerShell, and that DCSyncing from any Domain Admin user is easily detected.

– Thank you John for your time. We’ll send you an email later today on how we can proceed with the hiring process.

– Really? We just started… We are like 15 minutes in!

References

Addiction Neuroscience 101 – YouTube

The Neurobiology of Addiction Addiction 101 in Olson – YouTube

The Skinner Box – How Games Condition People to Play More – Extra Credits – YouTube